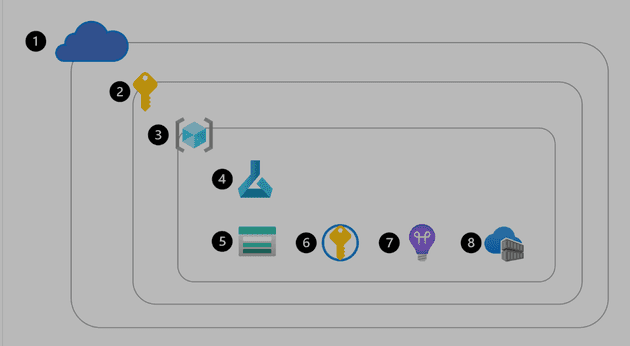

Resource creation:

- Get access to Azure, for example through the Azure portal.

- Sign in to get access to an Azure subscription.

- Create a resource group within your subscription.

- Create an Azure Machine Learning service to create a workspace.

When a workspace is provisioned, Azure will automatically create other Azure resources within the same resource group to support the workspace:

- Azure Storage Account: To store files and notebooks used in the workspace, and to store metadata of jobs and models.

- Azure Key Vault: To securely manage secrets such as authentication keys and credentials used by the workspace.

- Application Insights: To monitor predictive services in the workspace.

- Azure Container Registry: Created when needed to store images for Azure Machine Learning environments.
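As a rough sketch, the last creation step (provisioning the workspace) can also be done with the Azure Machine Learning Python SDK v2 instead of the portal. This assumes the subscription and resource group already exist and that the `azure-ai-ml` and `azure-identity` packages are installed; the function name and its arguments are illustrative, not part of the SDK.

```python
def create_workspace(subscription_id, resource_group, workspace_name, location):
    """Provision an Azure Machine Learning workspace in an existing resource group."""
    # SDK imports live inside the function so this file can be loaded
    # on a machine where the Azure packages are not installed.
    from azure.ai.ml import MLClient
    from azure.ai.ml.entities import Workspace
    from azure.identity import DefaultAzureCredential

    # Client scoped to the subscription and resource group (no workspace yet).
    ml_client = MLClient(
        DefaultAzureCredential(),
        subscription_id=subscription_id,
        resource_group_name=resource_group,
    )

    workspace = Workspace(name=workspace_name, location=location)

    # begin_create starts a long-running operation; result() blocks until it ends.
    return ml_client.workspaces.begin_create(workspace).result()
```

Calling `create_workspace("<subscription-id>", "<resource-group>", "<workspace-name>", "<region>")` with your own values should leave the supporting resources listed above in the same resource group.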

Workspace:

The workspace is the top-level container for managing infrastructure and for training and deploying models. It tracks the training history, which makes your work reproducible and robust, and it stores all logs, metrics, outputs, models, and snapshots of your code.

5 Types of compute

To improve portability, environments are usually created in Docker containers, which are in turn hosted on compute targets:

- Compute instances (for development, e.g. Jupyter notebooks)

- Compute cluster (automatically scalable)

- AKS cluster (highly scalable distributed compute, for production scenarios)

- Attached compute (e.g. Azure Synapse Analytics)

- Serverless compute (on-demand compute fully managed by Azure)
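To make the "automatically scalable" part concrete, here is a minimal sketch of creating a compute cluster with the Python SDK v2. It assumes an authenticated `MLClient` for the workspace is passed in; the cluster name, VM size, and node limits are placeholder values, and the VM size must be available in your region and quota.

```python
def create_compute_cluster(ml_client, name="aml-cluster", size="STANDARD_DS3_V2",
                           max_instances=4):
    """Create (or update) an autoscaling compute cluster in the workspace."""
    # Lazy import: only needed when the function is actually called.
    from azure.ai.ml.entities import AmlCompute

    cluster = AmlCompute(
        name=name,
        size=size,                        # VM size (assumed placeholder)
        min_instances=0,                  # scale down to zero nodes when idle
        max_instances=max_instances,      # upper bound for automatic scale-out
        idle_time_before_scale_down=120,  # seconds before idle nodes are released
    )

    # Long-running operation; result() waits until the cluster is ready.
    return ml_client.compute.begin_create_or_update(cluster).result()
```

Setting `min_instances=0` means the cluster holds no nodes between jobs, so nodes are only allocated while work is queued or running.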

Datastore:

Workspaces don't contain data; rather, they connect to different data sources through references known as datastores. The connection information for the data service that a datastore represents is stored in the Azure Key Vault.

Assets (not data asset):

Assets are created and used at various stages of a project and include models, environments, data, and components.

- Model: Register a model in the workspace to persist the model binary files; specify the name and version.

- Environments: Specify the software packages, environment variables, and software settings needed to run scripts. An environment is stored as an image in the Azure Container Registry, which is created with the workspace the first time an environment is used. Environments give you consistent, reusable runtime contexts for your experiments, regardless of where the experiment script is run.
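Registering a model with a name and version might look like the sketch below, using the Python SDK v2. It assumes an authenticated `MLClient` and a local model file; `register_model` and `asset_reference` are hypothetical helper names, and the asset type used here (`CUSTOM_MODEL`) is just one of the available options.

```python
def asset_reference(name, version):
    """Build the 'azureml:<name>:<version>' string used to refer to a registered asset."""
    return f"azureml:{name}:{version}"


def register_model(ml_client, name, version, path):
    """Register local model files in the workspace under a name and version."""
    # Lazy imports: only needed when the function is actually called.
    from azure.ai.ml.constants import AssetTypes
    from azure.ai.ml.entities import Model

    model = Model(
        name=name,
        version=str(version),          # versions are stored as strings
        path=path,                     # local file or folder with the model binaries
        type=AssetTypes.CUSTOM_MODEL,  # assumption: a generic, framework-agnostic model
    )
    return ml_client.models.create_or_update(model)
```

Because every registration carries a version, a later job can pin an exact model, for example `asset_reference("my-model", 1)`, rather than whatever happens to be newest.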

Datastore & Data assets:

Datastores contain the connection information to Azure data storage services; data assets refer to a specific file or folder.

Data assets: Use data assets to access the same data repeatedly without having to provide authentication each time. When you create a data asset in the workspace, you specify the path that points to the file or folder, along with a name and version.
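The relationship between the two can be sketched as follows: the datastore supplies the connection, and the data asset is a named, versioned pointer to a path inside it. The helper names are hypothetical, and the example assumes an authenticated `MLClient` and a single file (`URI_FILE`); a folder would use a different asset type.

```python
def datastore_uri(datastore_name, relative_path):
    """Build an azureml:// URI that points to a path inside a datastore."""
    return f"azureml://datastores/{datastore_name}/paths/{relative_path.lstrip('/')}"


def register_data_asset(ml_client, name, version, path):
    """Create a data asset that points at a single file."""
    # Lazy imports: only needed when the function is actually called.
    from azure.ai.ml.constants import AssetTypes
    from azure.ai.ml.entities import Data

    asset = Data(
        name=name,
        version=str(version),
        path=path,                  # e.g. the result of datastore_uri(...)
        type=AssetTypes.URI_FILE,   # assumption: the asset is one file
    )
    return ml_client.data.create_or_update(asset)
```

For example, `datastore_uri("workspaceblobstore", "data/train.csv")` returns `azureml://datastores/workspaceblobstore/paths/data/train.csv`; the file name is made up, while `workspaceblobstore` is the name of the default blob datastore in a workspace.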

Components: Reusable snippets of code. Use components when creating pipelines.

3 ways to train models:

- Use Automated Machine Learning: Automated Machine Learning iterates through algorithms paired with feature selections to find the best performing model for your data.

- Run a Jupyter notebook: Notebooks run on compute instances and are stored in the Azure Storage account; ideal for development.

- Run a script as a job (production-ready). A job can be one of:

  - Command: Execute a single script.

  - Sweep: Perform hyperparameter tuning when executing a single script.

  - Pipeline: Run a pipeline consisting of multiple scripts or components.

When you submit a pipeline you created with the designer it will run as a pipeline job. When you submit an Automated Machine Learning experiment, it will also run as a job.
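A command job and a sweep job built on top of it could be sketched like this with the Python SDK v2. Everything specific here is an assumption for illustration: a `./src` folder containing `train.py`, a `--reg_rate` script argument, a registered environment named `my-training-env`, a cluster named `aml-cluster`, and a metric called `Accuracy` that the script logs.

```python
# Placeholder values; replace with names that exist in your workspace.
TRAIN_COMMAND = "python train.py --reg_rate ${{inputs.reg_rate}}"
ENVIRONMENT = "my-training-env@latest"
COMPUTE = "aml-cluster"


def build_command_job():
    """Define a command job that runs a single training script."""
    from azure.ai.ml import command  # lazy import: needs the azure-ai-ml package

    return command(
        code="./src",                 # folder uploaded together with the job
        command=TRAIN_COMMAND,
        inputs={"reg_rate": 0.01},    # default value for the script argument
        environment=ENVIRONMENT,
        compute=COMPUTE,
        display_name="train-model",
    )


def build_sweep_job():
    """Turn the command job into a sweep job over the reg_rate hyperparameter."""
    from azure.ai.ml.sweep import Choice

    # Replace the fixed input with a search space, then call sweep().
    job_for_sweep = build_command_job()(reg_rate=Choice(values=[0.01, 0.1, 1]))
    sweep_job = job_for_sweep.sweep(
        compute=COMPUTE,
        sampling_algorithm="grid",
        primary_metric="Accuracy",    # must match a metric the script logs
        goal="Maximize",
    )
    sweep_job.set_limits(max_total_trials=4, max_concurrent_trials=2, timeout=7200)
    return sweep_job


def submit(ml_client, job):
    """Submit any job definition (command, sweep, or pipeline) to the workspace."""
    return ml_client.jobs.create_or_update(job)
```

The same `submit` call covers all three job types, which reflects the idea that command, sweep, and pipeline runs are all tracked as jobs in the workspace.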